One-man team: multi-agent workflows

Sourced by A/Prof Vanessa Panettieri, Editor (Educational), AFOMP Pulse

Physical Sciences, Peter MacCallum Cancer Centre, Australia

Large language models (LLMs) like ChatGPT have completely changed how we interact with computers. Tools such as MidJourney and Sora take this even further into creative spaces, generating incredibly realistic images and videos. But most AI models stop at creating content – when it comes to getting things done, we need more. Imagine planning a trip to Korea: I don’t just want an AI to suggest an itinerary and find flight options; I want it to actually book the tickets for me, like a personal assistant would. Simply put, we need AI that does things, not just talks about them. That’s where agentic AI comes in.

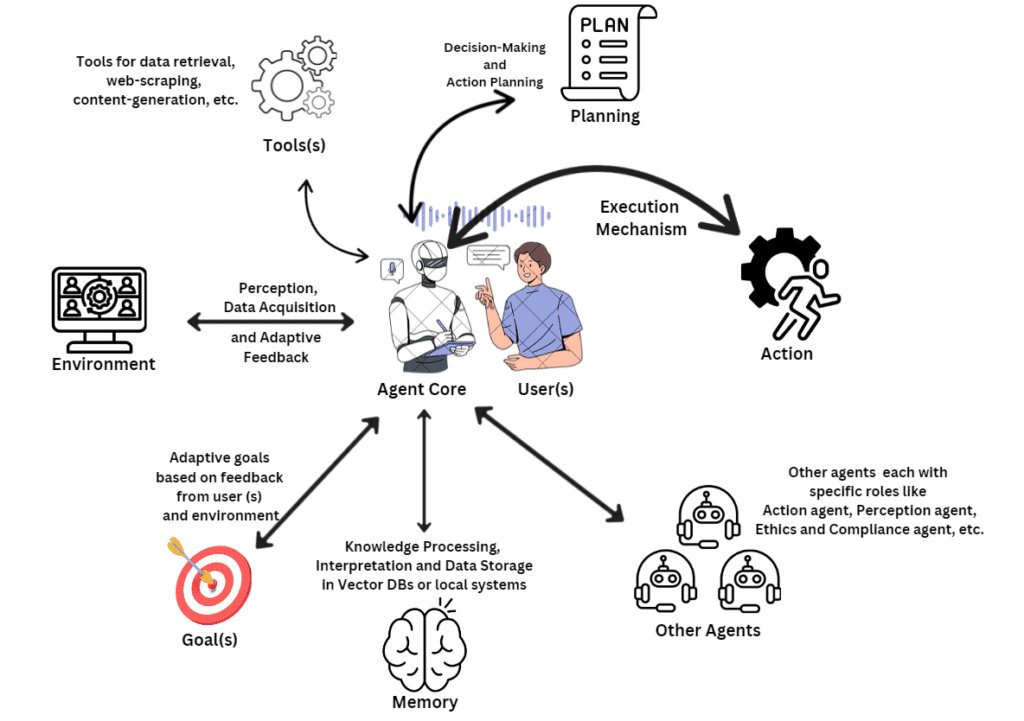

So, what exactly is an AI agent? It’s surprisingly simple: just an LLM assigned a specific job. Take our travel example: if I task an LLM with booking my flight to Korea, that LLM instance becomes my ticket agent. What makes this powerful? First, unlike older rule-based tools, LLMs understand natural language commands and can make decisions. No more rigid, programming-like instructions. Second, we can give these agents permissions – the digital keys they need to actually do things. My ticket agent might get access to the web to search flights and (securely!) use my payment details to book them. These permissions are called “tools,” and agents can activate them as needed. To make this tool-calling seamless, the industry adopted the Model Context Protocol (MCP), an open standard introduced by Anthropic in 2024. Think of MCP as the “USB standard” for agentic AI: any tool or agent that speaks this protocol can connect and work together, no matter who built it. This interoperability opens the door to real-time decision-making, scalable automation, better resource allocation and faster problem-solving.
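The idea of an agent as "an LLM with a job and a set of permitted tools" can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Agent` class and the `search_flights` and `book_flight` functions are hypothetical stand-ins, no real LLM or MCP server is involved, and the choice of tool is hard-coded where a real agent would let the model decide.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


# Hypothetical tools: stand-ins for real web-search and payment integrations.
def search_flights(destination: str) -> str:
    return f"Found 3 flights to {destination}"


def book_flight(destination: str) -> str:
    return f"Booked a flight to {destination}"


@dataclass
class Agent:
    """A job description plus the tools the agent is permitted to use."""
    role: str
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def use_tool(self, name: str, argument: str) -> str:
        # The agent may only call tools it has explicitly been granted.
        if name not in self.tools:
            raise PermissionError(f"{self.role} has no permission to use '{name}'")
        return self.tools[name](argument)


ticket_agent = Agent(
    role="ticket agent",
    tools={"search_flights": search_flights, "book_flight": book_flight},
)
print(ticket_agent.use_tool("search_flights", "Korea"))
```

Asking this ticket agent to use a tool it was never given, such as a hypothetical `send_email`, raises a `PermissionError`, which is the point of treating tools as permissions.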

While a single AI agent handles simple jobs well, imagine scaling this up inside a travel agency. Tasks grow increasingly diverse and complex over time. The AI agent might start by booking tickets and handling calls, but soon it’s also calculating revenue, filing taxes, and more. Eventually, the agent drowns in choices – juggling too many tools and burning resources just deciding what to do next. That’s where multi-agent workflows shine. Instead of one overwhelmed AI, you deploy a team of specialised agents: a booking expert, a finance specialist, a taxation officer – each mastering their own domain. Crucially, a single human can oversee this entire AI team, creating what’s essentially a one-man team powered by many agents.

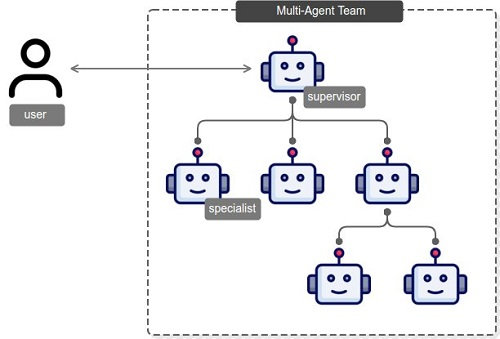

So how do we coordinate all these agents? A simple linear chain, where one agent hands off to the next, works fine for step-by-step tasks. But for complex, real-world challenges? Think about a business. Just as a company uses managers, analysts, and specialists to tackle different parts of a project, multi-agent AI workflows deploy a hierarchy:

- A supervisor agent acts as the mission commander (also the main contact with the human user), breaking down complex requests.

- It delegates subtasks to specialist agents who actually work on the jobs.

- If a task requires deeper expertise, specialists can even call upon expert agents, creating a dynamic problem-solving chain.

In a hypothetical medical scenario, a supervisor agent could act like a GP (general practitioner), delegating diagnostic tasks to specialised agents (like radiologists or cardiologists) and even calling in expert agents for rare conditions. Overall, this mirrors how human organisations scale efficiently: collaboration through clear roles and delegation.

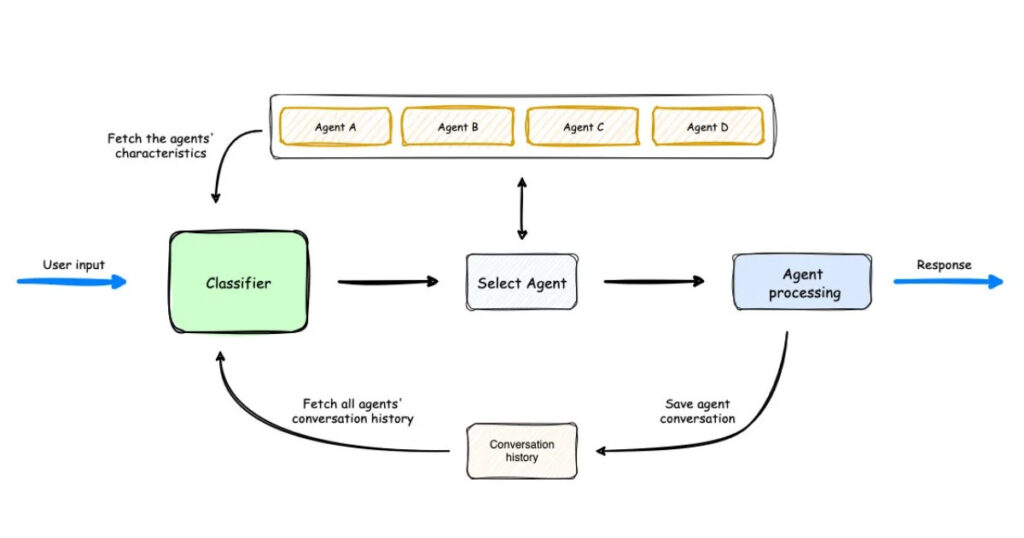
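The supervisor, specialist and expert hierarchy from the medical scenario can be sketched as plain function calls. This is a minimal sketch, not a real implementation: every function name here is hypothetical, each stub stands in for an LLM-backed agent, and the supervisor receives the request already split into subtasks, whereas a real supervisor would use an LLM to do the splitting.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical expert agent, called only when a specialist escalates.
def rare_disease_expert(task: str) -> str:
    return f"expert opinion on '{task}'"


# Hypothetical specialist agents.
def radiologist(task: str) -> str:
    # A specialist can escalate to an expert when the task is beyond it.
    if "rare" in task:
        return rare_disease_expert(task)
    return f"radiology report for '{task}'"


def cardiologist(task: str) -> str:
    return f"cardiology report for '{task}'"


SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "radiology": radiologist,
    "cardiology": cardiologist,
}


def supervisor(subtasks: List[Tuple[str, str]]) -> List[str]:
    """Delegate each (domain, task) pair to the matching specialist."""
    return [SPECIALISTS[domain](task) for domain, task in subtasks]


results = supervisor([
    ("radiology", "chest scan"),
    ("cardiology", "ECG"),
    ("radiology", "rare lesion"),
])
for line in results:
    print(line)
```

The third subtask shows the escalation path: the radiologist stub hands the rare case to the expert instead of answering it itself.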

Here’s the best part: just like no two companies are structured identically, there’s no single “right” way to design a multi-agent workflow. The possibilities are truly endless. You, as the CEO of all these agents, can devise the best organisational chart for your task. The routing can be customised. The following example shows a workflow design to select the most suitable agent (e.g. a chatbot) based on the characteristics of the previous conversation.
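In code, customised routing of this kind might look like the sketch below. It is an assumption-heavy illustration: the agent names and keyword lists are invented, and simple keyword matching stands in for whatever classifier or LLM a real router would use to read the conversation.

```python
from typing import List


def route(history: List[str]) -> str:
    """Pick an agent name from simple features of the conversation so far."""
    text = " ".join(history).lower()
    # Money-related topics take priority over travel topics.
    if any(word in text for word in ("refund", "invoice", "payment")):
        return "finance_agent"
    if any(word in text for word in ("flight", "ticket", "hotel")):
        return "booking_agent"
    # Fall back to a general-purpose chatbot when nothing matches.
    return "general_chatbot"


print(route(["I need a flight to Seoul"]))
print(route(["Where is my refund for the flight?"]))
```

The order of the checks is itself a design decision: a message that mentions both a refund and a flight goes to the finance agent here, and a different organisation might choose the opposite.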

Is this hard to implement? Actually, no. If you know some programming, you can build your team of agents using open-source frameworks such as LangChain, CrewAI and AutoGen, or commercial platforms such as Amazon Bedrock and AgentFlow.

But what’s the catch? Safety. When we give AI agents real-world permissions, their actions aren’t constantly monitored. And just as they scale productivity, they can also scale risks. An email agent could flood inboxes with spam; a booking bot might overspend on luxury flights. In extreme cases, like granting an AI control over critical military systems, the Hollywood nightmare of a rogue AI becomes plausible. So how do we stay safe? Humans can’t supervise AI acting at digital speeds, so we need AI peers. Imagine an AI safety committee: specialised agents monitoring others for harmful actions, like auditors working in real time. But this raises a profound question: are we then at the mercy of the AI guardians? We don’t have all the answers yet. But when industrial cranes first revolutionised construction, workers had no safety standards for these new machines either. They developed the standards along the way. And we will too.
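As a rough illustration of the auditor idea, the sketch below checks each proposed action before it is carried out. Everything in it is hypothetical: the `Action` fields, the limits and the agent names are made up for the example, and fixed rules stand in for the guardian, which in the scenario described above would itself be an AI agent.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Action:
    agent: str     # which agent proposed the action
    kind: str      # e.g. "send_email" or "book_flight"
    amount: float  # number of emails to send, or price in dollars


# Hypothetical limits, chosen for illustration only.
LIMITS = {"send_email": 50, "book_flight": 2000}


def audit(action: Action) -> bool:
    """Return True if the action is within its limit; unknown kinds are blocked."""
    limit = LIMITS.get(action.kind)
    return limit is not None and action.amount <= limit


def run_with_guard(actions: List[Action]) -> List[str]:
    """Record a verdict for every proposed action instead of executing blindly."""
    log = []
    for action in actions:
        verdict = "approved" if audit(action) else "blocked"
        log.append(f"{action.agent}:{action.kind}:{verdict}")
    return log


log = run_with_guard([
    Action("email_agent", "send_email", 10000),
    Action("booking_bot", "book_flight", 850),
])
for entry in log:
    print(entry)
```

Blocking unknown action kinds by default is a deliberate choice in this sketch: a guard that only knows what to forbid will miss whatever nobody thought to list.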

(AI was used in production of this article, especially to get advice on AI safety.)